Visualization Services in a Conference Context: An Approach by RFID Technology

José Bravo (Castilla-La Mancha University, Spain, Jose.Bravo@uclm.es)

Ramón Hervás (Castilla-La Mancha University, Spain, rhlucas@inf-cr.uclm.es)

Inocente Sánchez (Castilla-La Mancha University, Spain, Inocente.Sanchez@uclm.es)

Gabriel Chavira (Autonomous University of Tamaulipas, Mexico, gchavira@uat.edu.mx)

Salvador Nava (Autonomous University of Tamaulipas, Mexico, snava@uat.edu.mx)

Abstract: The Ambient Intelligence (AmI) vision is a new concept materialized
by the Sixth Framework Programme of the European Community. It involves three
key technologies: Ubiquitous Computing, Ubiquitous Communications and Natural
Interfaces. With the increase in context-aware applications it is important
to keep these technologies in mind. In this paper we present a context-aware
application for a conference site based on identification using RFID. The
highlights of this proposal are based on the "ws" concepts: three environments
are modelled by applying the "who" to the "when" and "where" to reach the
"what". In this sense certain services are offered to the conference attendees,
some of which are characteristic of this technology while others are the
result of a context-aware application: the visualization services named
"Mosaics of Information".

Keywords: Ubiquitous Computing, Context Aware, RFID, Implicit

Interaction, Visualization, Information Mosaics

Categories: F.1.1, H.1, H.1.1, H.1.2, H.5, H.5.2

1 Introduction

The Ubiquitous Computing paradigm and, more recently, Ambient Intelligence
(AmI) are visions in which technology becomes invisible, embedded,
present whenever we need it, enabled by simple interactions, attuned to
all our senses and adaptive to users and contexts [1].

A further definition of AmI is as follows:

"Ambient Intelligence refers to an exciting new paradigm of

information technology, in which people are empowered through a digital

environment that is aware of their presence and context sensitive, adaptive

and responsive to their needs, habits, gestures and emotions."

It proposes a shift in computing from the traditional computer to a
whole set of devices placed around us, providing users with an intelligent
background. This requires unobtrusive hardware, wireless communications,
massively distributed devices, natural interfaces and security. AmI is
based on three key technologies: 1) Ubiquitous Computing, which integrates
microprocessors into everyday objects; 2) Ubiquitous Communication between
objects and users, whose main goal is to deliver information at the moment
and place that the user needs it; 3) Natural Interfaces, which make the
interaction friendlier and closer to the user.

However in order for this vision to become a reality it is necessary

to analyze some definitions of the context. A. Dey defines this concept

as follows:

"Context is any information that can be used to characterize

the situation of an entity. An entity is a person, place, or object that

is considered relevant to the interaction between a user and an application,

including the user and application themselves." [2].

This author also defines a system as context-aware if it uses "context to
provide relevant information and/or services to the user, where relevancy
depends on the user's task".

In order to design a context-aware application it is necessary to treat
certain types of context information as more relevant than others [3].
The user profile and situation are essential; this is identity-awareness
(IAw). The relative location of people is location-awareness (LAw).
Time-awareness (TAw) is another main type of context-awareness to be taken
into account. The task which the user carries out and everything he wants
to do is captured by activity-awareness (AAw). Finally, objective-awareness
(OAw) looks at why the user wants to carry out a task in a certain place.
All these types of awareness answer the five basic questions (Who, Where,
What, When and Why), which provide the guidelines for context modelling.
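As an illustration of how the five questions could guide a context model, the following minimal sketch stores one observation per question. The class name `ContextSnapshot`, its field names and the sample values are assumptions made for this example; they are not part of the system described in this paper.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ContextSnapshot:
    """One observation of the five awareness types (hypothetical structure)."""
    who: str        # identity-awareness (IAw): user id or profile key
    where: str      # location-awareness (LAw): a recognized area, not coordinates
    when: datetime  # time-awareness (TAw)
    what: str       # activity-awareness (AAw): the task being carried out
    why: str        # objective-awareness (OAw): the goal behind the task


def describe(ctx: ContextSnapshot) -> str:
    """Render the snapshot as a sentence answering the five questions."""
    return (f"{ctx.who} is in {ctx.where} at {ctx.when:%H:%M} "
            f"doing '{ctx.what}' in order to {ctx.why}")


# Sample values are invented for the example.
snapshot = ContextSnapshot(
    who="tom",
    where="session room 1",
    when=datetime(2006, 1, 1, 10, 30),
    what="attend lecture",
    why="follow RFID research",
)
print(describe(snapshot))
```

Keeping the location as a named area rather than coordinates mirrors the relative notion of location used later in the ontology.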

Once the context and its important features are defined, it is time
to study new forms of interaction that approach the user by means
of more natural interfaces. At this point we turn to Albrecht
Schmidt and his concept of Implicit Human Computer Interaction (iHCI) [4][5].
It is defined as follows:

"iHCI is the interaction of a human with the environment and

with artefacts, which is aimed to accomplish a goal. Within this process

the system acquires implicit input from the user and may present implicit

output to the user"

Schmidt defines implicit input as what the system perceives of the user
interacting with the physical environment, allowing the system to anticipate
the user by offering implicit outputs. In this sense the user can concentrate
on the task, not on the tool, as the Ubiquitous Computing paradigm proposes.

The next step that this author proposes is Embedded Interaction, in two
senses. The first embeds technologies into artefacts, devices
and environments. The second, at a conceptual level, is the embedding
of interactions in the user's activities (tasks or actions) [6].

With these ideas in mind, our main goal is to achieve natural interaction,

as the implicit interaction concept proposes.

A good method for obtaining input to the systems comes from the devices

that identify and locate people. Want and Hopper locate individuals by

means of active badges which transmit signals that are picked up by sensors

situated in the building [7]. IBM developed the Blueboard

experiment with a display and RFID tag for the user's collaboration [8].

A similar project is IntelliBadge [9] developed for

the academic conference context.

This paper establishes the identification process as an implicit and

embedded input to the system, perceiving the user's identity, his profile

and other kinds of dynamic data. This information, complemented by the

context in which the user is found, as well as by the schedule and time,

makes it possible to anticipate the user's needs. An additional contribution

is to complement the system inputs with sensing capabilities. We should

point out that embedded interaction is also present when the user approaches

visualization devices and obtains adaptive information.

We are working in the conference context with three typical environments:

the reception area, the common area and the session rooms.

In the next section we present the conference context through a scenario
and provide an ontology for context-aware visualization-based services. We
then describe the RFID technology and the architectures for the three areas.
After that we present our concept of Visualization Mosaics, which show
information to those attending the conference. A simple interaction
with the Mosaics through a set of sensors is also presented, and the paper
finishes with the conclusions drawn.

2 The Conference Site Context

To understand ambient intelligence environments it is important to describe
the different scenarios and processes arising in a conference context.
In addition it is necessary to develop a context ontology.
The following points address both: the conference scenario
and the ontology for conference services.

2.1 A Scenario

Tom arrives at the conference site and goes directly to reception. Some
days beforehand, he had given his data to the organization: affiliation,
research interests, keywords, etc. The reason for providing these preferences
is social: the other attendees will be aware of them, so shared interests
will not be left to be discovered by chance. Tom asks the organization for
an invoice for his registration and the receptionist says that they will
let him know when it is ready.

In this area Tom goes up to an information point (kiosk). It is simply
a computer screen telling him about the sessions that will interest him
this morning according to his preferences. Nearby, in the same
area, he can see a board showing information about the conference program.

Then Tom goes to the room where the opening keynote address will be delivered.
The screen shows a mosaic of information regarding the imminent lecture:
its most outstanding aspects and the speaker's data (university, research,
lines of work, projects, keywords, etc.).

Once the keynote session is over, Tom goes to one of the lectures that
had been recommended to him. As he passes through the door his identification
is recorded. From this moment he can be located by anyone who wants
to see him. The reading also adds to the attendance data for this session,
which will be shown on the external boards (attendance statistics).

In the main area of the board the session program is shown, with the titles
of the papers. The authors' names are written in different styles and
colours. On the left, in a secondary area, the conference information is
displayed, such as the location of each session room, as well as the coffee,
poster and demo areas, etc. (where). At the bottom of the board the program
of each session room appears, with the current session in each room highlighted.
Tom thinks that he will change session rooms later on, because one of the
papers in another room interests him. It also occurs to him that
it would be good if people did not have to move around so much between
rooms.

Tom wonders about the different font styles in the authors' names, and
asks himself why the name of one of the authors is flashing. Just then he
hears someone being called over the loudspeakers.
Suddenly, a man runs into the room and the name stops flashing.

The session begins with the chairperson presenting the contents of the
session. Following this, a man approaches the board and, when he gets very
close to it, the board automatically shows the first presentation in
its main area. On the left the presenter's name, university,
qualifications, rank and affiliations, lines of work and keywords can be
seen. The information about the session rooms remains at the bottom of
the board. Tom sees that the presenter moves his hand near the board to
advance each slide. Twelve minutes later, a reminder about the time remaining
appears on the board and the presenter presents his conclusions.

When the session finishes, the board displays some information about
the next session and about where the break area is. Tom goes for a
coffee and sees a board with conference information. There are people
near the board and, as our protagonist approaches it, he sees that a
notice is posted: "Your invoice is ready and you can pass by to pick
it up". To his surprise, there is another notice for him: "Tom, it
would be a good idea for you to get in touch with John Silver. You have
a common interest in the RFID topic".

He goes to reception and before he says a word, the receptionist reads

a message on the monitor screen. This message, alongside a photo of Tom,

indicates to this employee that the man in the photo is looking for an

invoice. Tom continues to be surprised by it all.

That afternoon, after reading the virtual boards quickly, Tom makes

his way to the session room in which he has to present his paper. He can

see the person in charge of chairing the session talking to some people

and looking at her PDA to check that all the speakers are in the room.

The chairperson calls Tom and he approaches the board. When he is near

it his presentation can be seen, and his colleague reminds him that with

a movement of his hand near the screen he can pass slides.

The above is a brief description of a conference scenario.

2.2 An Ontology for Visualization-Based Services

Once the scenario has been described, the next step is to define the
corresponding ontology for context awareness in order to understand all the
context concepts mentioned before. Dogac et al. propose the creation of a
context ontology to achieve a better understanding of ambient intelligence
scenarios [10]. At this point we define the important aspects of our
scenario as follows:

Entity:

Dey defines an entity as "a person, place, or object that is considered

relevant to the interaction between a user and an application" [11].

For us, all entities have to be identified and located, including, of
course, the user him/herself.

User:

The user is an active entity that requires and consumes services and
interacts implicitly with an intelligent environment that is aware of the
user's identity, profile, schedule, location, preferences, social situation
and activity.

Profile:

This is a significant aspect of the user and important context-aware information.
Dockhorn structures the user profile in five blocks: Identity (Id., Name,
etc.), Characteristics (male/female, place of birth), Preferences (colours,
food, etc.), Interests (music, sports) and History (record of actions)
[15].

Schedule:

This is the calendar representation of predictable activities, contacts,

reminders and important user dates. Also it makes it possible to plan the

services demanded by users.

Service:

A service is a benefit that satisfies a user's need.
Services can be defined at different levels of abstraction. In our context
we are modelling the generic visualization service, which integrates multiple
services studied at lower levels of abstraction.

Identification:

It refers to an active and transparent process for recording a single

user's identity. In our case study the identification process involves

a state of latent awareness waiting to detect new presences near the RFID

readers embedded in the environments.

Location:

This is a service to ascertain the relative location of a relevant
entity. The position is relative since, in our case study, the absolute
position is not of interest; location is determined by a recognized
area.

Customized contents:

These contents are user information that is structured and adapted according
to the profile and situation.

The contents shown through the visualization service can be different
for the diverse users present at the same time in the vicinity of the
visualization devices. For this reason it is necessary to focus on the
contextual information related to the nearby users and also to use techniques
to optimize the distribution of, and time for, contents.

Property: priority (main or secondary -> quantified)

Property: Size

Distribution:

We need to establish the most suitable placement of the contents,
according to the user profiles, contexts, schedules and time.

Related operations: to expand, to contract and to move.

Latency (property of contents; kinds: Cyclic, Time, Ending):

In the visualization service we define latency as the time from when content
is published to the moment that it is removed and stops being
displayed. Contents that no longer fit the circumstances or conditions
of the moment (the present users' situation) should be deleted.
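The latency property can be sketched as a visibility rule applied to each piece of content. The text lists three kinds (Cyclic, Time, Ending) without detailing them, so the interpretation below is an assumption: cyclic content stays in rotation, timed content expires after a fixed age, and ending content disappears when the condition that triggered it ends. All names (`LatencyKind`, `Content`, `is_visible`, `prune`) are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class LatencyKind(Enum):
    CYCLIC = "cyclic"  # assumed: repeats in a loop while it stays relevant
    TIME = "time"      # assumed: removed after a fixed number of seconds
    ENDING = "ending"  # assumed: removed when its triggering condition ends


@dataclass
class Content:
    text: str
    kind: LatencyKind
    published_at: float            # seconds on any consistent clock
    max_age: float = 0.0           # only used for LatencyKind.TIME
    condition_active: bool = True  # only used for LatencyKind.ENDING


def is_visible(content: Content, now: float) -> bool:
    """Decide whether a content item should still be displayed."""
    if content.kind is LatencyKind.TIME:
        return now - content.published_at < content.max_age
    if content.kind is LatencyKind.ENDING:
        return content.condition_active
    return True  # cyclic content stays until it is removed explicitly


def prune(contents: List[Content], now: float) -> List[Content]:
    """Drop every item whose latency has run out."""
    return [c for c in contents if is_visible(c, now)]
```

For example, a flashing author name in the scenario would be an ending item whose condition stops holding once the author enters the room.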

Structuring:

This is the identification and classification of different parts of

the contents.

Relationship:

It refers to the connection or correspondence of contents with others.

Knowing that they can be supplemented allows more direct, precise and concise

information.

Adaptability: This is the ability to show contents at a time suitable
for the present users, context and schedule. Related operations: to make
information appear and disappear.

All of these concepts can be adapted to the modelling of our context,
and to this end we have adopted RFID technology, which facilitates our
interaction: the user is only required to carry a card similar
to a credit card, without having to take it out when entering
a room or approaching a board [12,13,14,15]. This
adaptation is based on the ontology terms previously discussed, which serve
as inputs to the system. With these inputs it is easy to obtain services
through visualization.

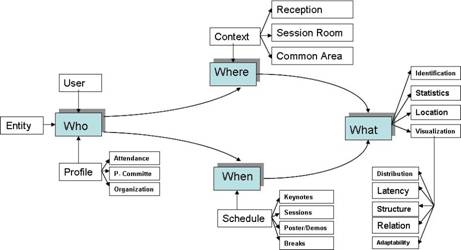

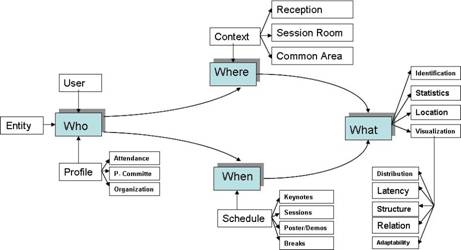

Figure 1: The context concepts in the conference site

Figure 1 schematizes these ontology terms and their
relationship with the conference context. We can see the different profiles
(Attendance, Program Committee and Organization) and three contexts:
Reception, Session Room and Common Area. In the last one, some of the
visualization services such as News, Notices, Posters and Demos are shown
on the board. The Schedule, with the Conference Program, includes the
session program, the posters and demos of the session, and breaks. Lastly,
the different services are shown in the "what" concept: Identification,
Attendance Statistics, Location and Visualization. The characteristics
related to the ontology are also shown.

3 The Conference Site

3.1 The RFID technology

RFID technology allows us to identify people and objects easily without
explicit user interaction. It involves three clearly differentiated
components: the reader (or transceiver, with its antenna),
the label (tag or transponder) and the computer. The tag is a small device
containing a transponder circuit that, in the case of passive tags,
takes its energy from the wave emitted continuously by the reader in order
to read and write the information it contains. Passive tags can
store over 512 bytes, including the identification number and other kinds
of user information.
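Since the tag carries the identification number together with user information in a small memory, it can help to check that a profile actually fits before writing it. The sketch below is only an illustration: the JSON encoding, the function names and the 512-byte limit (taken as a conservative reading of the figure quoted above) are assumptions, and no real reader API is involved.

```python
import json

# Assumption: a conservative capacity based on the "over 512 bytes" figure.
TAG_CAPACITY_BYTES = 512


def encode_tag_payload(tag_id: str, profile: dict) -> bytes:
    """Serialize the id and profile compactly and refuse oversized payloads."""
    payload = json.dumps(
        {"id": tag_id, "profile": profile},
        separators=(",", ":"),
        ensure_ascii=False,
    ).encode("utf-8")
    if len(payload) > TAG_CAPACITY_BYTES:
        raise ValueError(
            f"payload is {len(payload)} bytes, tag holds {TAG_CAPACITY_BYTES}"
        )
    return payload


def decode_tag_payload(payload: bytes) -> tuple:
    """Recover the (id, profile) pair written by encode_tag_payload."""
    record = json.loads(payload.decode("utf-8"))
    return record["id"], record["profile"]
```

A deployed system would more likely use a compact binary layout; JSON is used here only to keep the example readable.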

This technology offers important advantages over traditional
bar codes. The labels do not have to be visible to be read, the reader can
be located at a distance of a meter (for passive tags), the tags can be
reused and the reading speed is over 30 labels per second. Security is also
addressed: the information is transmitted in encrypted form and only
readers with permission to do so are able to manipulate the data.

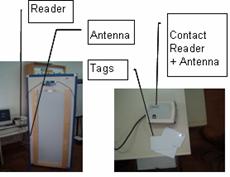

Figure 2: RFID devices

Figure 2 shows two types of devices. The one on

the left shows a reader and an antenna with a read-and-write capability

range of over 75 cm. This has been especially designed for its location

on classroom doors, or near boards. It can read several labels simultaneously,

when identifying people entering the classroom. It can also identify the

teacher or the students who may be approaching the board. The one on the

right is a contact reader including an antenna with a range of only 10

cm. A model of the tag is also shown. This identification system is designed

particularly for individual use.

We are using another kind of RFID set, allowing a greater distance between
the reader and the tags (2 or 3 meters); see Figure 2 on the right. Entry
to and exit from each context are also controlled. This system is called
HFKE (Hands Free Keyless Entry) and has a semi-passive tag that uses a battery
along with 32 Kbytes of EPROM memory for the user's data. The reader (HFRDR)
continuously transmits low-frequency waves at 125 kHz. When a tag detects
this wave it activates its microcontroller, which sends the required
information on a UHF frequency. There is also another device called HFCTR,
a control unit for up to eight readers within a distance of up to 1000 meters.
This unit is connected to the network via TCP/IP.

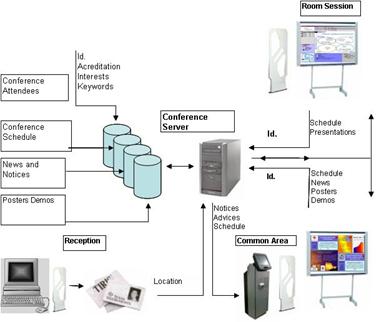

3.2 The Architecture

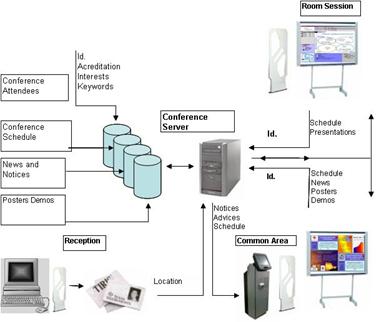

The server manages includes the necessary information for the conference:

data in relationship to attendances, schedule, news, notices, poster and

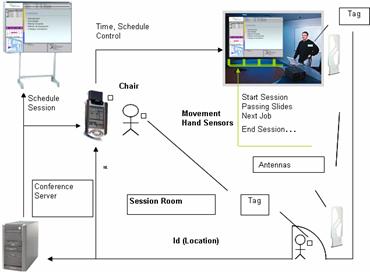

demos as we can see in Figure 3.In addition every conference

site is equipped with RFID devices: readers and antennas. Also de visualization

devices such as boards and kiosks are shown in the Room Sessions and the

Common Area. Other devices such as PDA for chairs are needed.

Figure 3: Architecture for conference context

3.3 The reception

The main process in this context is the handing out of identification tags
to conference-goers. At this time, information registering the profile
of the person attending the conference is stored in each tag. Data about
affiliation, keywords, lines of research, interests, the presentation code
for speakers, etc. are essential to complement the identification
process.

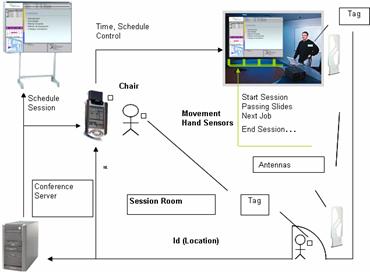

3.4 The Session Room

The session room could be considered the most important context in the

conference site. We have modelled the different processes produced in it

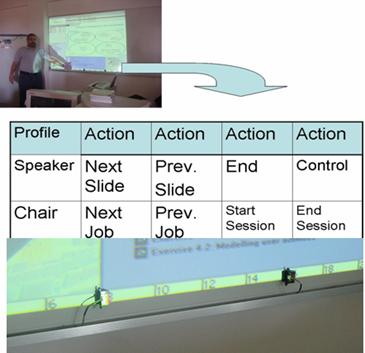

as the Figure 4 shows. If a person attending the conference

passes near the door's antenna, the system captures his/her identification

by means of the reader. Also other information such as the profile, code

for presentation, etc. it is available at this moment in time. Once this

information is in the server it is transmitted to the session's chairperson,

aided by PDA, and from this moment, he/she knows if this person is going

to make a presentation. In addition, the session attendance statistics

are updated.
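The door process just described can be sketched as a single event handler: each tag read updates the attendance count and, when the tag carries a presentation code scheduled for this session, notifies the chair. This is a minimal sketch under stated assumptions; `TagRead`, `SessionRoom` and the `chair_inbox` list (which stands in for the chair's PDA) are hypothetical names, not the actual implementation.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class TagRead:
    tag_id: str
    name: str
    presentation_code: Optional[str] = None  # present only on speakers' tags


@dataclass
class SessionRoom:
    scheduled_codes: Set[str]
    attendees: Set[str] = field(default_factory=set)
    arrived_codes: Set[str] = field(default_factory=set)
    chair_inbox: List[str] = field(default_factory=list)  # stands in for the PDA

    def on_door_read(self, read: TagRead) -> None:
        """Handle one identification captured by the door antenna."""
        self.attendees.add(read.tag_id)  # a set ignores repeated reads
        code = read.presentation_code
        if code in self.scheduled_codes and code not in self.arrived_codes:
            self.arrived_codes.add(code)
            self.chair_inbox.append(f"{read.name} (paper {code}) has arrived")

    def attendance(self) -> int:
        return len(self.attendees)

    def all_presenters_present(self) -> bool:
        return self.arrived_codes == self.scheduled_codes
```

The `all_presenters_present` check corresponds to the condition the chairperson verifies before starting the session.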

The chairperson starts the session when he/she has checked that the

presenters of all the papers are in attendance. Then he/she, with a simple

movement of the hand near a sensor placed below the board, enables the

session to commence. This is possible through the combination of the identification

by the antenna placed next to the board and the profile of the chairperson

stored in the tag.

Figure 4: The session room

Another possibility is produced when the first presenter approaches

the board. In this case the combination of the identification, the profile

and the code of the presentation included in the tag with the context,

schedule and time information, causes the presentation to appear on the

board. The presenter advances each slide by passing a hand

near the corresponding sensor.

Once the presenter has finished, the chairperson approaches the board
to bring up the presentation of the next paper. This step could be optional,
because the system can trigger it from the context, schedule and time
information together with the knowledge that the previous presentation has
concluded.

The final process is to finish the session. This occurs when the chairperson
approaches the board and passes his/her hand near the corresponding
sensor. It is important to keep in mind the combination of the identification
and the information contained in the tag. The information about context,
schedule and time and the control of the sensors are also key to managing
the presentation of information on the board easily.
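The session room processes above (start, presentation, slide control, end) can be read as a small state machine driven by who is identified at the board and by the hand sensor. The sketch below is a simplified illustration: the state names, the single-sensor model and the rule that passing beyond the last slide ends a presentation are assumptions made for the example.

```python
from enum import Enum, auto


class State(Enum):
    WAITING = auto()     # before the chair opens the session
    OPEN = auto()        # session open, no presentation on the board
    PRESENTING = auto()  # a presentation is shown on the board
    CLOSED = auto()


class SessionBoard:
    def __init__(self, slide_counts):
        self.slide_counts = dict(slide_counts)  # presentation code -> slides
        self.state = State.WAITING
        self.current = None
        self.slide = 0

    def approach(self, role, code=None):
        """A tag with this role (and optional presentation code) nears the board."""
        if role == "speaker" and self.state is State.OPEN and code in self.slide_counts:
            self.state, self.current, self.slide = State.PRESENTING, code, 1

    def hand_pass(self, role):
        """A hand passes the sensor while a tag with this role is identified."""
        if role == "chair" and self.state is State.WAITING:
            self.state = State.OPEN
        elif role == "chair" and self.state is State.OPEN:
            self.state = State.CLOSED
        elif role == "speaker" and self.state is State.PRESENTING:
            if self.slide < self.slide_counts[self.current]:
                self.slide += 1
            else:  # assumed rule: passing beyond the last slide ends the talk
                self.state, self.current, self.slide = State.OPEN, None, 0
```

The same gesture means different things depending on the identified role and the current state, which is the point of combining identification with the sensors.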

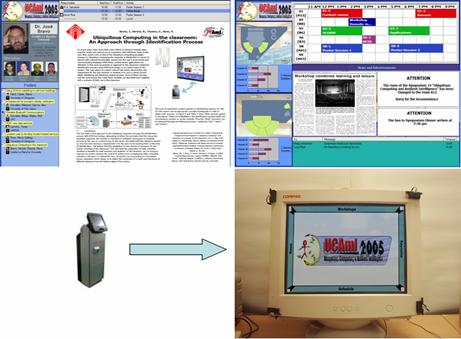

3.5 The Common Area

In this room conference-goers obtain information about the visualization

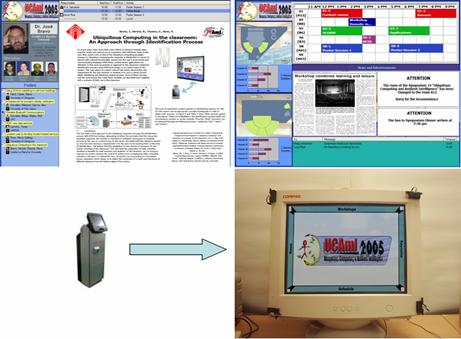

services. There are two kinds of devices: boards and kiosks, equipped with

antennas and readers.

Figure 5 shows one of the boards on the top right

on which people can observe news and notices about the event. The news

appears on the board when someone enters the room and the notices when

someone approaches it. Also the attendance statistics and the maps for

each room can be visualized.

Figure 5: Common Area devices
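The board behaviour described above (news when someone enters the room, notices when the person concerned approaches) can be expressed as a small selection rule. The function name, the argument shapes and the sample messages in the usage note are assumptions for illustration only.

```python
from typing import Dict, List, Set


def board_contents(in_room: Set[str], near_board: Set[str],
                   news: List[str], notices: Dict[str, List[str]]) -> List[str]:
    """Return the items a common-area board should show right now."""
    shown: List[str] = []
    if in_room:  # news appears as soon as anyone is in the room
        shown.extend(news)
    for tag_id in sorted(near_board):  # notices appear only on approach
        shown.extend(notices.get(tag_id, []))
    return shown
```

For instance, with `news = ["Coffee at 11:00"]` and a notice stored for the tag `"tom"`, the board shows only the news while Tom is merely in the room, and adds his notice once his tag is read near the board.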

Other actions in this room are the session posters and demos. Posters

are shown when the author approaches the board in conference-schedule time.

This mosaic is presented on the top left of Figure 5.

Demos are usually presented on PCs situated in this area and equipped
with contact readers. Each demo has a time slot that depends on the
schedule. The presenter only has to pass his tag near
the contact reader.

The kiosk displays the same information but includes specific messages
which can only be read by the user concerned. The interaction is through
sensors located across the monitor, which manage menu options when a hand
passes near them. In addition, the location process is available from the
kiosks. Once the user has selected this option he/she can operate a virtual
keyboard with sensors in order to locate people in any of the rooms of the
conference site. The system also provides users with information about
other conference-goers with similar interests.
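The paper does not state how similar interests are detected. One simple possibility, shown here purely as an assumption, is to compare the keyword sets stored in the profiles using the Jaccard index and to suggest contacts whose overlap exceeds a threshold. The function names, the threshold value and the sample profiles in the usage note are all hypothetical.

```python
from typing import Dict, List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Share of keywords two profiles have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_contacts(user: str, profiles: Dict[str, Set[str]],
                     threshold: float = 0.25) -> List[str]:
    """List other attendees, most similar first, above the threshold."""
    mine = profiles[user]
    scored = [(jaccard(mine, kws), other)
              for other, kws in profiles.items() if other != user]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for score, name in scored if score >= threshold]
```

With profiles such as `{"Tom": {"rfid", "context", "hci"}, "John Silver": {"rfid", "context"}, "Ann": {"databases"}}`, a call to `suggest_contacts("Tom", profiles)` would propose John Silver, in the spirit of the notice Tom receives in the scenario.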

The whole process uses the combination of identification and sensors

as previously mentioned.

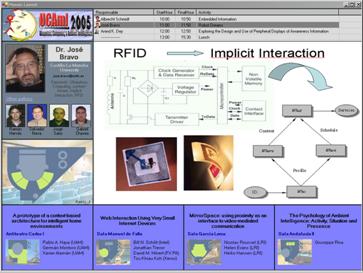

4 The Visualization Services: Mosaics

We have tried to materialize the implicit output by offering information
to the users by means of a visualization mosaic. It is desirable
that the information appears automatically once identification has taken
place. Thus, in accordance with the concept of "who" mentioned
before, the system knows how to display mosaics, placing different kinds of
information in each pane that the mosaic is made up of. Aspects such as
pane size, location in the mosaic, priority according to profile and
latency of information are critical features in achieving an optimum
visualization.
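As a rough illustration of how priority could drive pane size, the sketch below divides the board area among panes in proportion to a numeric priority. The proportional rule, the `Pane` class and the priority scale are assumptions for this example; the actual mosaic layout also takes location and latency into account.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Pane:
    name: str
    priority: int  # assumed scale: a higher number means more important


def allocate_area(panes: List[Pane]) -> Dict[str, float]:
    """Give each pane a share of the board proportional to its priority."""
    total = sum(p.priority for p in panes)
    if total <= 0:
        raise ValueError("at least one pane needs a positive priority")
    return {p.name: p.priority / total for p in panes}
```

Setting the priority of every secondary pane to zero makes the main pane take the whole board, which corresponds to the "expand" operation mentioned in the ontology.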

Figure 6 shows one of the possible session room

mosaics. The work is presented in the main area, the large one on the right.

Other aspects such as the author's name, university, qualifications, rank

and affiliation, and all the session programs are also presented.

This mosaic is adaptable: the main area could take up the whole information
area, with references to other rooms only appearing in the area below the
floor plan graphic and, below that, some information when a change occurs
in these rooms.

The main area can work in relation to others. For example, once the

author begins to present his work, the area of the author's details could

change and the work program could be shown in that part.

The mosaic changes automatically showing information about conference

news and messages when the session finishes. The news appears when anyone

is in the room and is repeated in a continuous cycle. Individual announcements

are shown when the conference-attendees approach the board (proximity).

Figure 6: The Session Room Mosaic

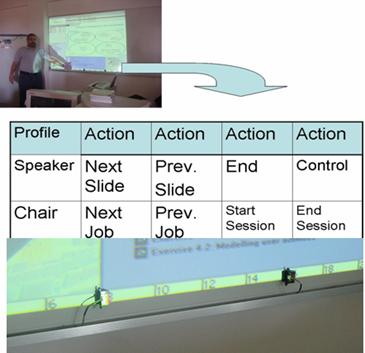

4.1 RFID Sensor Fusion

As we have mentioned before, we complement the implicit interaction
through RFID with some sensors placed below the board. In our prototype we
have four sensors with different functionalities. There are two kinds of
interaction: passing a hand quickly near the sensors or holding it there
for a few seconds.

Also, the user can have his/her sensor functionality included in his/her
tag. When the system reads the identification number, it can also read the
profile and, included in it, the combination of sensor actions and hand
movements.

Figure 7 shows the board with four sensors and an
example for two profiles: the speaker and the chair. For the speaker we
can see the actions that habitually occur when presenting a paper,
such as passing slides, finishing the presentation and, finally, a control
for when the speaker needs to interact with his/her presentation in another
way. The actions for the chair are likewise the characteristic ones:
starting the session, controlling each paper to be presented and ending
the session.

Figure 7: RFID sensor functionality
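The fusion of identification and sensors can be sketched as a lookup keyed by profile, sensor number and gesture. The particular assignment of actions to sensors and the one-second boundary between a quick pass and a hold are assumptions made for this example; only the action names follow the description of Figure 7.

```python
from typing import Optional

# Assumption: a hand detected for less than this counts as a quick pass.
QUICK_PASS_MAX_SECONDS = 1.0

# Hypothetical assignment of the actions named in the text to sensors 1-4.
ACTIONS = {
    "speaker": {
        (1, "pass"): "next slide",
        (2, "hold"): "finish presentation",
        (3, "hold"): "open presentation control",
    },
    "chair": {
        (1, "hold"): "start session",
        (2, "pass"): "next paper",
        (3, "hold"): "end session",
    },
}


def classify_gesture(duration_seconds: float) -> str:
    """Distinguish the two kinds of interaction by how long the hand stays."""
    return "pass" if duration_seconds < QUICK_PASS_MAX_SECONDS else "hold"


def resolve_action(profile: str, sensor: int, duration_seconds: float) -> Optional[str]:
    """Map an identified profile plus a sensor event to an action, if any."""
    gesture = classify_gesture(duration_seconds)
    return ACTIONS.get(profile, {}).get((sensor, gesture))
```

Because the table is keyed by profile, it could equally be read from the tag itself, as the text suggests, instead of being fixed in the system.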

5 Conclusions

We have presented a context-aware application for a conference site
through identification by RFID. The main goal is to achieve natural interfaces
in the daily activities of this context, so that the only requirement for
the system to function is wearing a tag. The interaction is very simple because
it is embedded in the user's actions, such as entering a room or approaching
a board. At that moment the system offers some services automatically and
unobtrusively.

Furthermore we have presented a complement for the implicit or embedded

interaction with a set of sensors placed near the visualization devices

initiated by simply passing a hand near them.

Acknowledgments

This work has been financed by a 2005-2006 SERVIDOR project (PBI-05-034)

from Junta de Comunidades de Castilla-La Mancha and the Ministerio de Ciencia

y Tecnología in Mosaic Learning project 2005-2007 (TSI2005-08225-C07-07).

References

[1] ISTAG, Scenarios for Ambient Intelligence in

2010. Feb. 2001. http://www.cordis.lu/ist/istag.htm.

[2] Dey, A. (2001). "Understanding and Using

Context". Personal and Ubiquitous Computing 5(1), 2001, pp. 4-7.

[3] Kevin Brooks (2003). "The Context Quintet:

narrative elements applied to Context Awareness". In Human Computer

Interaction International Proceedings, 2003, (Crete, Greece) by Erlbaum

Associates, Inc.

[4] Schmidt, A. (2000). "Implicit Human Computer

Interaction Through Context". Personal Technologies Volume 4(2&3)

191-199.

[5] Schmidt, A. (2005). "Interactive Context-Aware
Systems Interacting with Ambient Intelligence". In Ambient Intelligence.
G. Riva, F. Vatalaro, F. Davide & M. Alcañiz (Eds.).

[6] Schmidt, A., M. Kranz, and P. Holleis. Interacting
with the Ubiquitous Computer: Towards Embedding Interaction. In Smart
Objects & Ambient Intelligence (sOc-EuSAI 2005). 2005. Grenoble, France.

[7] Want, R. & Hopper, A. (1992). "The Active
Badge Location System". ACM Transactions on Information Systems, 10(1):91-102,
Jan 1992.

[8] Daniel M. Russell, Jay P. Trimble, Andreas Dieberger.

"The use patterns of large, interactive display surfaces: Case studies

of media design and use for BlueBoard and MERBoard". In Proceedings

of the 37th Hawaii International Conference on System Sciences 2004.

[9] Donna Cox, Volodymyr Kindratenko, David Pointer

In Proc. of 1st International Workshop on Ubiquitous Systems for Supporting

Social Interaction and Face-to-Face Communication in Public Spaces, 5th

Annual Conference on Ubiquitous Computing, October 12- 4, 2003, Seattle,

WA, pp. 41-47.

[10] Dogac, A., Laleci, G., Kabak, Y.,"Context

Frameworks for Ambient Intelligence". eChallenges 2003, October 2003,

Bologna, Italy.

[11] Dey, A., Abowd, G. Towards a better understanding

of Context and Context-Awareness. CHI 2000 Workshop on the What, Who, Where,

When, Why and How of Context-Awareness, April 1-6, 2000.

[12] José Bravo, Ramón

Hervás, Inocente Sánchez, Agustin

Crespo. "Servicios por identificación en el aula

ubicua". In Avances en Informática Educativa. Juan Manuel

Sánchez et al. (Eds.). Servicio de Publicaciones —

Universidad de Extremadura. ISBN 84-7723-654-2.

[13] Bravo, J., R. Hervás, and G. Chavira,
Ubiquitous computing at classroom: An approach through identification process.
Journal of Universal Computer Science. Special Issue on Computers and Education:
Research and Experiences in eLearning Technology. 2005. 11(9): p. 1494-1504.

[14] Bravo, J., Hervás, R., Chavira, G.,

Nava, S. & Sanz, J. (2005). "Display-based services through identification:

An approach in a conference context". Ubiquitous Computing & Ambient

Intelligence (UCAmI'05). Thomson. ISBN:84-9732-442-0. pp.3-10.

[15] Bravo, J., Hervás, R., Chavira, G. &

Nava, S. "Modeling Contexts by RFID-Sensor Fusion". Accepted

paper from 3rd Workshop on Context Modeling and Reasoning (CoMoRea 2006).

Pisa (Italy).
